# Hands-On: Deploying a Project with Kubernetes

# Preparing Resources

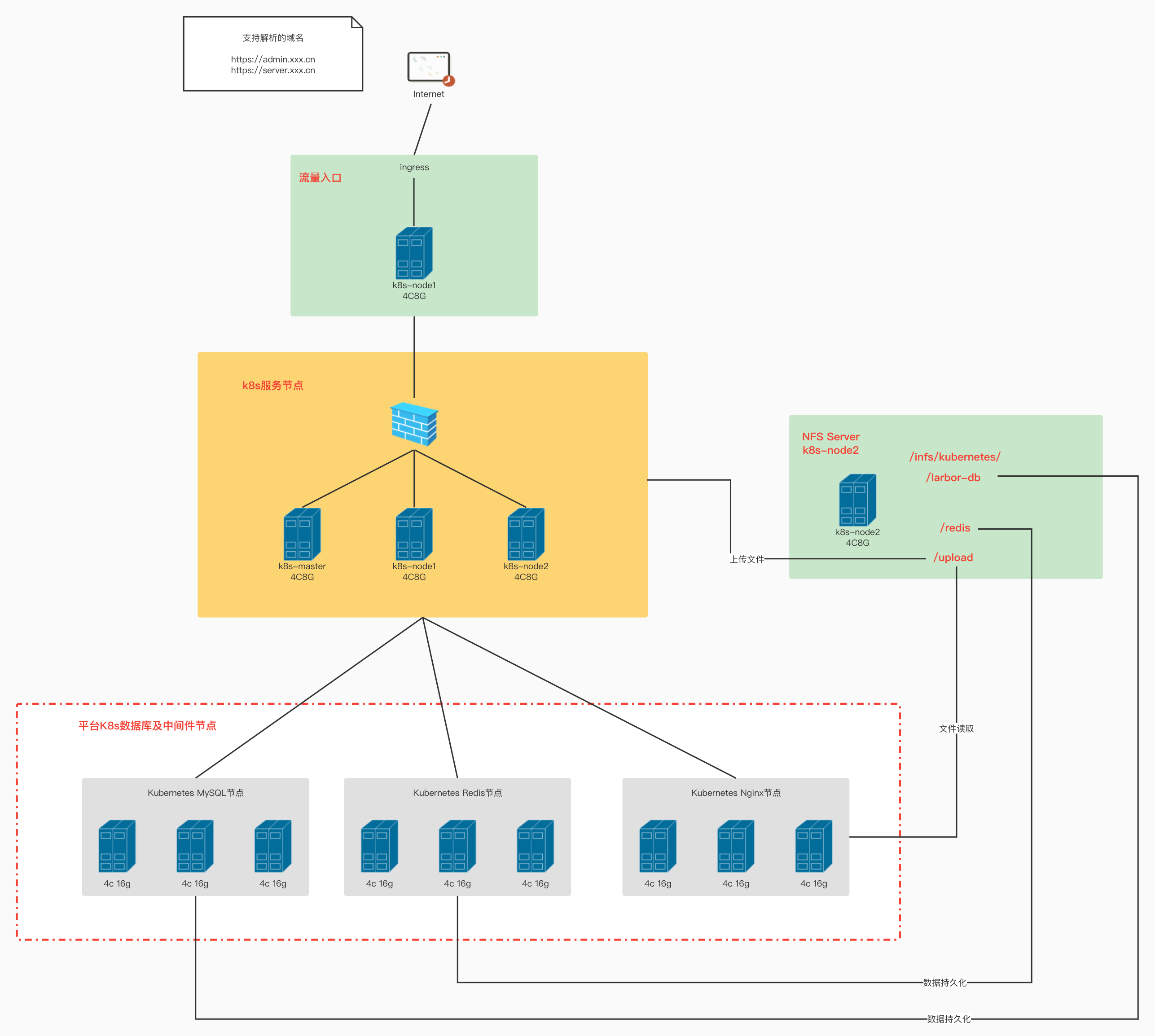

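This deployment uses three servers that can reach each other over the internal network. Only the k8s-node1 node is exposed to the public internet, listening on ports 443 and 80.

Note: everything here is deployed from YAML files, which are given below. To deploy, copy the YAML, make minor modifications, and run `kubectl apply -f xxx.yaml`.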

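- Servers
  - k8s-master
  - k8s-node1
  - k8s-node2
- Publicly resolvable domain names. The ones below are examples only; this article uses them in place of the real domains.
  - admin.xxx.cn
  - server.xxx.cn
- TLS certificates for the domains, either self-created or obtained from a third-party CA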

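# Resources Deployed in This Walkthrough

- NFS service: used for volume mounts, a relatively simple approach
- MySQL service
- Java application service, named larbor-server
- Front-end application service, named larbor-admin
- Redis service
- nginx service
- Ingress exposing the front-end and back-end application services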

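# Architecture Diagram

# Installation

# Setting Up the NFS File Server

Normally, for performance, the NFS server is installed on a dedicated machine. Since this is a learning exercise, we install it on the k8s-node2 node instead.

# Installing the Shared Server

Run the following commands on k8s-node2. They share the directory /infs/kubernetes and create the subdirectories larbor-db, redis, and upload under it, so that each mount gets its own directory and files stay easy to manage.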

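    # Install nfs-utils
    $ yum install nfs-utils

    # Create the shared directory /infs/kubernetes and the per-service subdirectories
    $ mkdir -p /infs/kubernetes/larbor-db /infs/kubernetes/redis /infs/kubernetes/upload

    # Configure the NFS export. * allows every IP; write a single IP or a subnet
    # instead to restrict access. The parentheses hold the export options:
    #   rw: read-write
    #   no_root_squash: root on the client keeps root privileges on the share
    #   (the default, root_squash, maps root to the anonymous user)
    $ vi /etc/exports
    /infs/kubernetes *(rw,no_root_squash)

    # Start NFS
    $ systemctl start nfs

    # Enable NFS at boot
    $ systemctl enable nfs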
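The export comment above mentions that `*` can be replaced by a single IP or a subnet. As a minimal sketch of that idea, the helper below appends an export line for a given client spec only if the exact line is not already present. The function name `add_export`, the subnet `192.168.0.0/24`, and the scratch file are assumptions for illustration; on the real server the file would be /etc/exports, and `exportfs -r` re-reads it after a change.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "<dir> <clients>(rw,no_root_squash)" to an exports
# file unless that exact line already exists.
add_export() {
  local dir="$1" clients="$2" file="$3"
  local line="${dir} ${clients}(rw,no_root_squash)"
  # -x matches whole lines only, -F treats the pattern as a fixed string
  grep -qxF "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Demo against a scratch file (assumed subnet; this is not the real /etc/exports)
exports_file="$(mktemp)"
add_export /infs/kubernetes 192.168.0.0/24 "$exports_file"
add_export /infs/kubernetes 192.168.0.0/24 "$exports_file"   # second call changes nothing
cat "$exports_file"
```

Restricting the export to the cluster's internal subnet is generally safer than `*` when `no_root_squash` is in use, because root on any permitted client acts as root on the share.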

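# Mounting and Testing

On k8s-master and k8s-node1, run the commands below to mount the shared directory at /k8s-nfs-mount. (nfs-utils must be installed on these nodes as well, otherwise `mount -t nfs` has no NFS helper to call.)

Be sure to test the mount once it is done!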

    # Create the local directory that will use the NFS share
    $ mkdir /k8s-nfs-mount

    # Mount /infs/kubernetes from k8s-node2 onto the local /k8s-nfs-mount directory.
    # Note: k8s-node2 resolves through a local hosts entry; without that mapping,
    # use the node's IP address instead.
    $ mount -t nfs k8s-node2:/infs/kubernetes /k8s-nfs-mount

    # Enter the local /k8s-nfs-mount directory
    $ cd /k8s-nfs-mount

    # Create a test file, then look in the shared directory on k8s-node2;
    # if the file appears there, the mount works
    $ touch liuxiaolu-mount-test.text
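Besides the manual file test, the mount can be checked from the mounts table. The sketch below is one way to do that, assuming a Linux host where /proc/mounts lists one mount per line as device, mount point, and filesystem type. The function name `is_nfs_mounted` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if <dir> appears as an nfs/nfs4 mount in a
# mounts table (default /proc/mounts; fields: device, mount point, fs type, ...).
is_nfs_mounted() {
  local dir="$1" table="${2:-/proc/mounts}"
  awk -v d="$dir" '$2 == d && $3 ~ /^nfs/ { found = 1 } END { exit !found }' "$table"
}
```

On a node, `is_nfs_mounted /k8s-nfs-mount && echo "mounted"` reports the result; the optional second argument exists only so the function can be exercised against a sample file.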

# Creating the MySQL Service

    # Part 1: user credentials
    apiVersion: v1
    kind: Secret
    metadata:
      name: larbor-db
      namespace: default
    type: Opaque
    data:
      # root password is 123456 (values under data are base64-encoded)
      mysql-root-password: "MTIzNDU2"
      # regular user password is 123456
      mysql-password: "MTIzNDU2"
    ---

    # Part 2: the Deployment
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: larbor-db
      namespace: default
    spec:
      selector:
        matchLabels:
          project: www
          app: mysql
      template:
        metadata:
          labels:
            project: www
            app: mysql
        spec:
          containers:
          - name: db
            image: mysql:5.7.30
            args: ["--character-set-server=utf8mb4", "--lower_case_table_names=1"]
            resources:
              requests:
                cpu: 500m
                memory: 512Mi
              limits:
                cpu: 500m
                memory: 512Mi
            env:
            - name: MYSQL_ROOT_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: larbor-db
                  # use the credential mysql-root-password
                  key: mysql-root-password
            - name: MYSQL_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: larbor-db
                  # use the credential mysql-password
                  key: mysql-password
            # NOTE: MYSQL_USER/MYSQL_PASSWORD create an additional user; root already
            # exists with MYSQL_ROOT_PASSWORD, so consider a dedicated application
            # user here instead of root
            - name: MYSQL_USER
              value: "root"
            # create the database larbor by default
            - name: MYSQL_DATABASE
              value: "larbor"
            ports:
            - name: mysql
              containerPort: 3306
            livenessProbe:
              exec:
                command:
                - sh
                - -c
                - "mysqladmin ping -u root -p${MYSQL_ROOT_PASSWORD}"
              # health check starts 30s after the container starts
              initialDelaySeconds: 30
              # check every 10s
              periodSeconds: 10
            readinessProbe:
              exec:
                command:
                - sh
                - -c
                - "mysqladmin ping -u root -p${MYSQL_ROOT_PASSWORD}"
              # health check starts 5s after the container starts
              initialDelaySeconds: 5
              # check every 10s
              periodSeconds: 10
            volumeMounts:
            - name: data
              # mount the volume data at /var/lib/mysql
              mountPath: /var/lib/mysql
          volumes:
          # define the volume data
          - name: data
            nfs:
              server: k8s-node2
              # backed by the shared directory /infs/kubernetes/larbor-db
              path: /infs/kubernetes/larbor-db
    ---

    # Service for the database, type ClusterIP: reachable only inside the cluster, not from the public internet
    apiVersion: v1
    kind: Service
    metadata:
      name: larbor-db
      namespace: default
    spec:
      type: ClusterIP
      ports:
      - name: mysql
        port: 3306
        targetPort: mysql
      selector:
        project: www
        app: mysql
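The values under `data` in the Secret are base64-encoded, which is why the password 123456 appears as MTIzNDU2. A small sketch of producing and checking such values follows; the function names `encode_secret` and `decode_secret` are hypothetical, and `base64 -d` assumes a GNU coreutils or BusyBox `base64`.

```shell
#!/usr/bin/env bash
# printf '%s' avoids the trailing newline that echo would add to the secret
encode_secret() { printf '%s' "$1" | base64; }
decode_secret() { printf '%s' "$1" | base64 -d; }

encode_secret 123456      # prints MTIzNDU2
decode_secret MTIzNDU2    # prints 123456
```

Encoding with `echo 123456 | base64` would include the newline in the password and yield a different value, which is a common cause of failed logins after deploying a Secret.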

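# Java Application Service

I use a prebuilt image here, which has to be pulled from an image registry. Pulling the image requires authentication, so first create a credential for the registry.

# Creating the Credential

Create a credential named registry-aliyun-auth with username xxx, password xxx, and registry address registry.cn-hangzhou.aliyuncs.com:

    kubectl create secret docker-registry registry-aliyun-auth --docker-username=xxx --docker-password=xxx --docker-server=registry.cn-hangzhou.aliyuncs.com

Tip: how the credential is referenced in a pod spec

    imagePullSecrets:
    - name: registry-aliyun-auth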

# Creating the Deployment

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: larbor-server
      name: larbor-server
    spec:
      # run 3 replicas
      replicas: 3
      selector:
        matchLabels:
          app: larbor-server
      template:
        metadata:
          labels:
            app: larbor-server
        spec:
          imagePullSecrets:
          # pull the image with the credential registry-aliyun-auth
          - name: registry-aliyun-auth
          containers:
          # image to deploy
          - image: registry.cn-hangzhou.aliyuncs.com/liuxiaoluxx/larbor:v1.0
            name: larbor-server
            resources:
              requests:
                # each container requests 1 CPU and 1Gi of memory
                cpu: 1
                memory: 1Gi
              limits:
                # each container may use at most 2 CPUs and 2Gi of memory
                cpu: 2
                memory: 2Gi
            readinessProbe:
              httpGet:
                path: /
                port: 8082
              # start health checks 50s after startup, against port 8082 and path /
              initialDelaySeconds: 50
              # check every 10s
              periodSeconds: 10
            volumeMounts:
            # volume mount: the application writes uploaded files to /app/upload
            - name: upload
              mountPath: /app/upload
          volumes:
          - name: upload
            nfs:
              server: k8s-node2
              # files written to /app/upload land in /infs/kubernetes/upload on the NFS server
              path: /infs/kubernetes/upload
    ---

    # Service for the Deployment, type ClusterIP: reachable inside the cluster, not from the public internet
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app: larbor-server
      name: larbor-server
    spec:
      type: ClusterIP
      ports:
      - port: 8082 # Service port, reachable only from inside the cluster (nodes and pods)
        protocol: TCP # protocol
        targetPort: 8082 # port the application listens on inside the container
      selector: # label selector: binds the Service to pods labeled app=larbor-server
        app: larbor-server

# Setting Up the Front-End Application Service

This also uses a prebuilt image pulled from the image registry, with the registry credential created above.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: larbor-admin
      name: larbor-admin
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: larbor-admin
      template:
        metadata:
          labels:
            app: larbor-admin
        spec:
          imagePullSecrets:
          - name: registry-aliyun-auth
          containers:
          - image: registry.cn-hangzhou.aliyuncs.com/liuxiaoluxx/labor-admin:v1.1
            name: larbor-admin
            resources:
              requests:
                cpu: 0.5
                memory: 500Mi
              limits:
                cpu: 0.5
                memory: 500Mi
    ---

    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app: larbor-admin
      name: larbor-admin
    spec:
      type: ClusterIP # exposure type
      ports:
      - port: 80 # Service port, reachable only from inside the cluster (nodes and pods)
        protocol: TCP # protocol
        targetPort: 80 # port the application listens on inside the container
      selector: # label selector: binds the Service to pods labeled app=larbor-admin
        app: larbor-admin

# Creating Redis

    # ConfigMap holding the redis configuration, so it can be adjusted without rebuilding the image
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: redis-config
    data:
      redis_6379.conf: |
        protected-mode no
        port 6379
        tcp-backlog 511
        timeout 0
        tcp-keepalive 300
        daemonize no
        supervised no
        pidfile /var/run/redis_6379.pid
        loglevel notice
        logfile "/data/redis_6379.log"
        databases 16
        always-show-logo yes
        # requirepass {{ $name.redis.env.passwd }}
        save 900 1
        save 300 10
        save 60 10000
        stop-writes-on-bgsave-error yes
        rdbcompression yes
        rdbchecksum yes
        dbfilename dump_6379.rdb
        dir /data
        replica-serve-stale-data yes
        replica-read-only yes
        repl-diskless-sync no
        repl-diskless-sync-delay 5
        repl-disable-tcp-nodelay no
        replica-priority 100
        lazyfree-lazy-eviction no
        lazyfree-lazy-expire no
        lazyfree-lazy-server-del no
        replica-lazy-flush no
        appendonly no
        appendfilename "appendonly.aof"
        appendfsync everysec
        no-appendfsync-on-rewrite no
        auto-aof-rewrite-percentage 100
        auto-aof-rewrite-min-size 64mb
        aof-load-truncated yes
        aof-use-rdb-preamble yes
        lua-time-limit 5000
        slowlog-log-slower-than 10000
        slowlog-max-len 128
        latency-monitor-threshold 0
        notify-keyspace-events ""
        hash-max-ziplist-entries 512
        hash-max-ziplist-value 64
        list-max-ziplist-size -2
        list-compress-depth 0
        set-max-intset-entries 512
        zset-max-ziplist-entries 128
        zset-max-ziplist-value 64
        hll-sparse-max-bytes 3000
        stream-node-max-bytes 4096
        stream-node-max-entries 100
        activerehashing yes
        client-output-buffer-limit normal 0 0 0
        client-output-buffer-limit replica 256mb 64mb 60
        client-output-buffer-limit pubsub 32mb 8mb 60
        hz 10
        dynamic-hz yes
        aof-rewrite-incremental-fsync yes
        rdb-save-incremental-fsync yes
        rename-command FLUSHALL SAVEMORE16
        rename-command FLUSHDB SAVEDB16
        rename-command CONFIG UPDATEC16
        rename-command KEYS NOALL16
    ---

    # Service
    apiVersion: v1
    kind: Service
    metadata:
      name: redis
      labels:
        name: redis
    spec:
      type: ClusterIP # change to NodePort if access from outside the cluster is needed
      ports:
      - port: 6379
        protocol: TCP
        targetPort: 6379
        name: redis-6379
      selector:
        name: redis # must match the pod labels in the Deployment (spec.template.metadata.labels)
    ---

    # Deployment
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: redis
      name: redis
    spec:
      replicas: 1
      selector:
        matchLabels:
          name: redis
      template:
        metadata:
          labels:
            name: redis
        spec:
          containers:
          - name: redis-6379
            image: redis:5.0
            volumeMounts:
            - name: configmap-volume
              mountPath: /usr/local/etc/redis/redis_6379.conf
              subPath: redis_6379.conf
            - name: data
              mountPath: "/data"
            command:
            - "redis-server"
            args:
            - /usr/local/etc/redis/redis_6379.conf
          volumes:
          # expose the ConfigMap redis-config as a volume
          - name: configmap-volume
            configMap:
              name: redis-config
              items:
              - key: redis_6379.conf
                path: redis_6379.conf
          - name: data
            nfs:
              server: k8s-node2
              path: /infs/kubernetes/redis
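One caveat when changing the redis configuration later: a ConfigMap mounted through `subPath`, as it is here, is not refreshed inside a running container, and redis-server reads its config file only at startup. A configuration change therefore needs a pod restart. The sketch below shows one way to do that; the function name `reload_redis_config` and the manifest file name `redis.yaml` are assumptions, and `kubectl rollout restart` requires kubectl 1.15 or newer.

```shell
#!/usr/bin/env bash
# Hypothetical helper: apply an edited manifest, then restart the redis
# Deployment so new pods start with the updated redis_6379.conf.
reload_redis_config() {
  local manifest="$1"
  kubectl apply -f "$manifest" &&
    kubectl rollout restart deployment/redis &&
    kubectl rollout status deployment/redis --timeout=120s
}
```

After editing the ConfigMap section of the manifest, it would be called as `reload_redis_config redis.yaml`.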

# Deploying nginx

# Deployment Manifest

    apiVersion: v1
    kind: Service
    metadata:
      name: nginx
      labels:
        name: nginx
    spec:
      type: ClusterIP # change to NodePort if access from outside the cluster is needed
      ports:
      - port: 80
        targetPort: 80
        name: nginx-80
      selector:
        name: nginx # must match the pod labels in the Deployment (spec.template.metadata.labels)
    ---

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: nginx
      name: nginx
    spec:
      replicas: 1
      selector:
        matchLabels:
          name: nginx
      template:
        metadata:
          labels:
            name: nginx
        spec:
          imagePullSecrets:
          - name: registry-aliyun-auth
          containers:
          - name: nginx
            image: registry.cn-hangzhou.aliyuncs.com/liuxiaoluxx/larbor-nginx:v1.1
            volumeMounts:
            - name: data
              # mount the shared directory /infs/kubernetes/upload at /opt/upload/ in the container, so nginx reads the shared files directly
              mountPath: "/opt/upload/"
          volumes:
          - name: data
            nfs:
              server: k8s-node2
              path: /infs/kubernetes/upload

# nginx.conf

The configuration does one thing: it maps URLs that start with /storage/ to the directory /opt/upload/ so that files can be read from there.

    #user nobody;
    worker_processes 1;

    #error_log logs/error.log;
    #error_log logs/error.log notice;
    #error_log logs/error.log info;

    #pid logs/nginx.pid;

    events {
        worker_connections 1024;
    }

    http {
        include mime.types;
        default_type application/octet-stream;

        #log_format main '$remote_addr - $remote_user [$time_local] "$request" '
        #                '$status $body_bytes_sent "$http_referer" '
        #                '"$http_user_agent" "$http_x_forwarded_for"';

        #access_log logs/access.log main;

        sendfile on;
        #tcp_nopush on;

        #keepalive_timeout 0;
        keepalive_timeout 65;

        # turn gzip on or off
        gzip on;
        # gzip threshold: only responses larger than 1k are compressed
        gzip_min_length 1k;
        # gzip compression level, 1-9; higher compresses better but uses more CPU time
        gzip_comp_level 9;
        # MIME types to compress (text/html is always compressed, and listing it again triggers a duplicate-type warning)
        gzip_types text/plain application/javascript application/x-javascript text/css application/xml text/javascript application/json;
        # static file handling: when on, nginx looks for a precompressed .gz file and returns it directly without spending CPU on compression; if none is found, the file is handled normally
        gzip_static on;
        # whether to add "Vary: Accept-Encoding" to the response headers; recommended
        gzip_vary on;
        gzip_proxied any;
        # buffers used for compression, in units of 4k; a 7k file requests 2*4k of buffer
        gzip_buffers 2 4k;
        # HTTP protocol version gzip applies to
        gzip_http_version 1.1;

        server {
            listen 80;
            server_name server.larbor.xinsanfeng.cn;

            location /storage/ {
                # with root, /storage/a.png is served from /opt/upload/storage/a.png;
                # use "alias /opt/upload/;" instead if the files sit directly under /opt/upload/
                root /opt/upload/;
                autoindex on;
            }

            error_page 500 502 503 504 /50x.html;
            location = /50x.html {
                root /usr/share/nginx/html;
            }
        }
    }
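How the `location /storage/` block maps a URL to a file depends on whether `root` or `alias` is used. The sketch below is a simplified model of that mapping written as plain string handling, not nginx itself; the function names and the file name a.png are made up for illustration.

```shell
#!/usr/bin/env bash
# Simplified model of how nginx maps a request URI to a file path.
# root:  file = <root> + <full URI>
# alias: file = <alias> + <URI with the location prefix removed>
resolve_root()  { printf '%s%s\n' "${1%/}" "$2"; }
resolve_alias() { printf '%s%s\n' "$1" "${3#"$2"}"; }

resolve_root  /opt/upload/ /storage/a.png             # prints /opt/upload/storage/a.png
resolve_alias /opt/upload/ /storage/ /storage/a.png   # prints /opt/upload/a.png
```

With the `root` directive used in this configuration, uploaded files need to live under a storage subdirectory of the shared upload directory for /storage/ URLs to resolve.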

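# Exposing the Front-End and Back-End Services with Ingress

# Creating the TLS Credentials

Because the services are exposed over TLS (HTTPS), certificates are needed.

- Create a certificate or obtain one from a third-party CA
- Upload the certificate to the server
- Create a secret of type tls named admin.xxx.cn, with key xx.key and cert xx.pem.
- Create a secret of type tls named server.xxx.cn, with key xx.key and cert xx.pem.

Note that --key and --cert both take file paths.

    kubectl create secret tls admin.xxx.cn --key=xx.key --cert=xx.pem
    kubectl create secret tls server.xxx.cn --key=xx.key --cert=xx.pem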
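For the "create a certificate yourself" option, a self-signed certificate is enough for testing. The sketch below generates one with openssl, reusing the file names xx.key and xx.pem from the commands above. The 365-day validity and the RSA 2048-bit key are arbitrary choices, and openssl is assumed to be installed.

```shell
#!/usr/bin/env bash
# Generate a self-signed certificate and an unencrypted private key for a test domain.
# Assumptions: openssl is installed; validity period and key size are arbitrary.
domain="admin.xxx.cn"
openssl req -x509 -nodes -newkey rsa:2048 -days 365 \
  -subj "/CN=${domain}" \
  -keyout xx.key -out xx.pem 2>/dev/null

# Inspect the subject of the generated certificate
openssl x509 -in xx.pem -noout -subject
```

Browsers will warn about a self-signed certificate, so this suits a test environment only; a public deployment would use a certificate issued by a CA.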

# Deploying the Ingress Controller

    apiVersion: v1
    kind: Namespace
    metadata:
      name: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    ---
    kind: ConfigMap
    apiVersion: v1
    metadata:
      name: nginx-configuration
      namespace: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    ---
    kind: ConfigMap
    apiVersion: v1
    metadata:
      name: tcp-services
      namespace: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    ---
    kind: ConfigMap
    apiVersion: v1
    metadata:
      name: udp-services
      namespace: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: nginx-ingress-serviceaccount
      namespace: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    ---

    # rbac.authorization.k8s.io/v1beta1 was removed in Kubernetes 1.22; v1 is used here instead
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      name: nginx-ingress-clusterrole
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    rules:
    - apiGroups:
      - ""
      resources:
      - configmaps
      - endpoints
      - nodes
      - pods
      - secrets
      verbs:
      - list
      - watch
    - apiGroups:
      - ""
      resources:
      - nodes
      verbs:
      - get
    - apiGroups:
      - ""
      resources:
      - services
      verbs:
      - get
      - list
      - watch
    - apiGroups:
      - ""
      resources:
      - events
      verbs:
      - create
      - patch
    - apiGroups:
      - "extensions"
      - "networking.k8s.io"
      resources:
      - ingresses
      verbs:
      - get
      - list
      - watch
    - apiGroups:
      - "extensions"
      - "networking.k8s.io"
      resources:
      - ingresses/status
      verbs:
      - update
    ---

    apiVersion: rbac.authorization.k8s.io/v1
    kind: Role
    metadata:
      name: nginx-ingress-role
      namespace: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    rules:
    - apiGroups:
      - ""
      resources:
      - configmaps
      - pods
      - secrets
      - namespaces
      verbs:
      - get
    - apiGroups:
      - ""
      resources:
      - configmaps
      resourceNames:
      # Defaults to "<election-id>-<ingress-class>"
      # Here: "<ingress-controller-leader>-<nginx>"
      # This has to be adapted if you change either parameter
      # when launching the nginx-ingress-controller.
      - "ingress-controller-leader-nginx"
      verbs:
      - get
      - update
    - apiGroups:
      - ""
      resources:
      - configmaps
      verbs:
      - create
    - apiGroups:
      - ""
      resources:
      - endpoints
      verbs:
      - get
    ---

    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      name: nginx-ingress-role-nisa-binding
      namespace: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: Role
      name: nginx-ingress-role
    subjects:
    - kind: ServiceAccount
      name: nginx-ingress-serviceaccount
      namespace: ingress-nginx
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: nginx-ingress-clusterrole-nisa-binding
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: nginx-ingress-clusterrole
    subjects:
    - kind: ServiceAccount
      name: nginx-ingress-serviceaccount
      namespace: ingress-nginx
    ---

    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: nginx-ingress-controller
      namespace: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    spec:
      selector:
        matchLabels:
          app.kubernetes.io/name: ingress-nginx
          app.kubernetes.io/part-of: ingress-nginx
      template:
        metadata:
          labels:
            app.kubernetes.io/name: ingress-nginx
            app.kubernetes.io/part-of: ingress-nginx
          annotations:
            prometheus.io/port: "10254"
            prometheus.io/scrape: "true"
        spec:
          hostNetwork: true
          # wait up to five minutes for the drain of connections
          terminationGracePeriodSeconds: 300
          serviceAccountName: nginx-ingress-serviceaccount
          nodeSelector:
            kubernetes.io/os: linux
          containers:
          - name: nginx-ingress-controller
            image: lizhenliang/nginx-ingress-controller:0.30.0
            args:
            - /nginx-ingress-controller
            - --configmap=$(POD_NAMESPACE)/nginx-configuration
            - --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services
            - --udp-services-configmap=$(POD_NAMESPACE)/udp-services
            - --publish-service=$(POD_NAMESPACE)/ingress-nginx
            - --annotations-prefix=nginx.ingress.kubernetes.io
            securityContext:
              allowPrivilegeEscalation: true
              capabilities:
                drop:
                - ALL
                add:
                - NET_BIND_SERVICE
              # www-data -> 101
              runAsUser: 101
            env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            ports:
            - name: http
              containerPort: 80
              protocol: TCP
            - name: https
              containerPort: 443
              protocol: TCP
            livenessProbe:
              failureThreshold: 3
              httpGet:
                path: /healthz
                port: 10254
                scheme: HTTP
              initialDelaySeconds: 10
              periodSeconds: 10
              successThreshold: 1
              timeoutSeconds: 10
            readinessProbe:
              failureThreshold: 3
              httpGet:
                path: /healthz
                port: 10254
                scheme: HTTP
              periodSeconds: 10
              successThreshold: 1
              timeoutSeconds: 10
            lifecycle:
              preStop:
                exec:
                  command:
                  - /wait-shutdown
    ---

    apiVersion: v1
    kind: LimitRange
    metadata:
      name: ingress-nginx
      namespace: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    spec:
      limits:
      - min:
          memory: 90Mi
          cpu: 100m
        type: Container
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: ingress-nginx
      namespace: ingress-nginx
    spec:
      type: NodePort
      ports:
      - name: http
        port: 80
        targetPort: 80
        protocol: TCP
      - name: https
        port: 443
        targetPort: 443
        protocol: TCP
      selector:
        # must match the pod labels of the DaemonSet above
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx

# Deploying the Ingress

Note: the ingress controller pods run only on the worker nodes. With hostNetwork: true they bind ports 80 and 443 on the node directly, and the tls section below makes the listed hosts serve their own certificates on 443.

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: larbor-ingress
      annotations:
        # CORS settings
        nginx.ingress.kubernetes.io/cors-allow-headers: "x-larbor-token,content-type"
        nginx.ingress.kubernetes.io/cors-allow-methods: "*"
        nginx.ingress.kubernetes.io/cors-allow-origin: "*"
        nginx.ingress.kubernetes.io/cors-expose-headers: "*"
        nginx.ingress.kubernetes.io/cors-max-age: "86400"
        nginx.ingress.kubernetes.io/enable-cors: "true"
        nginx.ingress.kubernetes.io/cors-allow-credentials: "true"
    spec:
      tls:
      - hosts:
        - server.xxx.cn
        secretName: server.xxx.cn
      - hosts:
        - admin.xxx.cn
        secretName: admin.xxx.cn
      rules:
      # requests for the front-end application go to the Service larbor-admin on port 80
      - host: admin.xxx.cn
        http:
          paths:
          - pathType: Prefix
            path: "/"
            backend:
              service:
                name: larbor-admin
                port:
                  number: 80
      # requests for the back-end application go to the Service larbor-server on port 8082
      - host: server.xxx.cn
        http:
          paths:
          - pathType: Prefix
            # URLs starting with /storage/ go to nginx, which serves the files
            path: "/storage/"
            backend:
              service:
                name: nginx
                port:
                  number: 80
          - pathType: Prefix
            path: "/"
            backend:
              service:
                name: larbor-server
                port:
                  number: 8082

# Wrap-Up

Open port 443 on the k8s-node1 node and add DNS records pointing admin.xxx.cn and server.xxx.cn at k8s-node1. The services are then reachable.
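Before testing through the domain names, it can help to confirm that every Deployment has finished rolling out. A minimal sketch follows, assuming kubectl is configured for the cluster and the resources live in the current namespace. The function name `wait_for_rollouts` and the 180-second timeout are assumptions.

```shell
#!/usr/bin/env bash
# Hypothetical helper: wait until every named Deployment has finished rolling out.
# Stops at the first Deployment that fails or times out.
wait_for_rollouts() {
  local name
  for name in "$@"; do
    kubectl rollout status "deployment/${name}" --timeout=180s || return 1
  done
}
```

For the Deployments in this article it would be called as `wait_for_rollouts larbor-db larbor-server larbor-admin redis nginx`, followed by a request such as `curl -I https://admin.xxx.cn` once DNS resolves to k8s-node1.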