# Kubernetes Scheduling

# Workflow for Creating a Pod

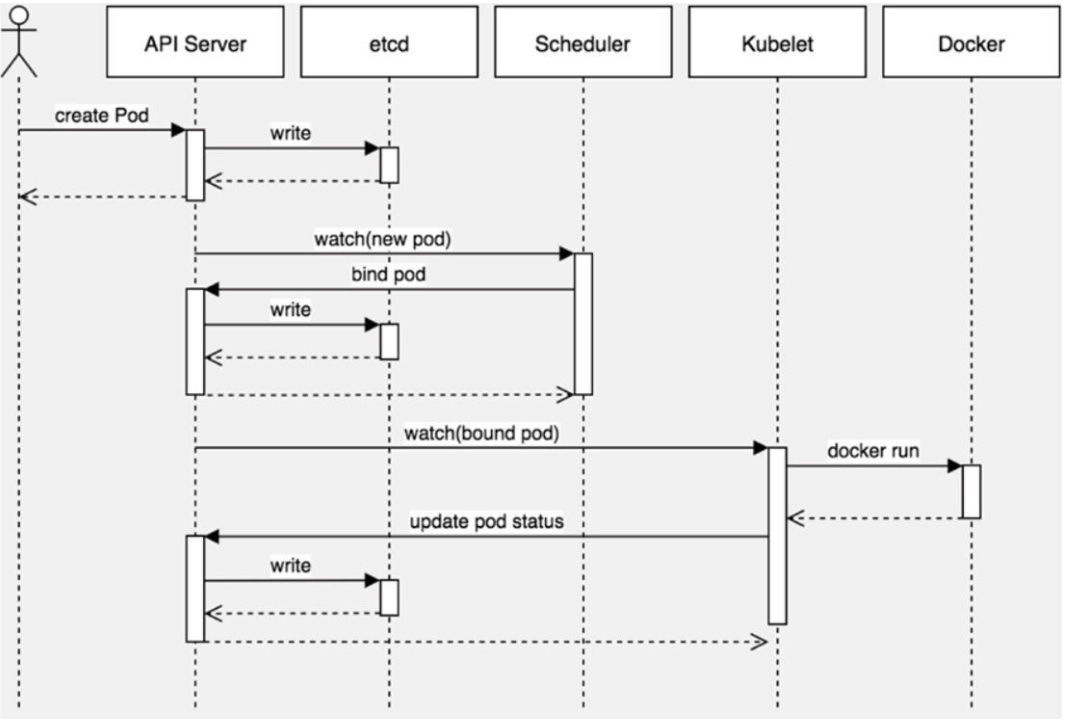

Kubernetes uses a controller architecture built on the list-watch mechanism, which decouples the interactions between its components.

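# Walkthrough of the Diagram

- kubectl run -> apiserver -> etcd -> scheduler -> kubelet -> docker -> container
- kubectl sends a request to create a pod
- The apiserver receives the request and writes the pod's configuration to etcd
- The scheduler picks up the pod's configuration via list/watch, selects a suitable node for it, and sends the result (the binding) back to the apiserver
- The kubelet, also via list/watch, fetches the pods bound to its own node
- The kubelet calls the container engine API to create the containers and reports the result back to the apiserver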
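The interaction above can be sketched as a toy event loop. This is only an illustration under heavy assumptions: `ApiServer`, `Scheduler` and `Kubelet` are hypothetical classes, a dict stands in for etcd, a queue of events stands in for watch streams, and the scheduler simply takes the first node. What it does show is that the components never call each other directly; they only read from and write to the apiserver.

```python
from collections import deque


class ApiServer:
    """Hypothetical stand-in for apiserver + etcd: stores pods, queues watch events."""

    def __init__(self):
        self.pods = {}          # pod name -> {"node": ..., "status": ...}
        self.events = deque()   # pending watch events
        self.watchers = []      # handlers registered by watching components

    def watch(self, handler):
        self.watchers.append(handler)

    def write(self, name, **fields):
        pod = self.pods.setdefault(name, {"node": None, "status": "Pending"})
        pod.update(fields)
        self.events.append((name, dict(pod)))   # every change becomes a watch event

    def run(self):
        # Deliver events until every component has nothing left to react to.
        while self.events:
            name, pod = self.events.popleft()
            for handler in self.watchers:
                handler(name, pod)


class Scheduler:
    def __init__(self, api, nodes):
        self.api, self.nodes = api, nodes
        api.watch(self.on_pod)

    def on_pod(self, name, pod):
        if pod["node"] is None:                  # pod not yet bound to a node
            chosen = self.nodes[0]               # placeholder for filtering + scoring
            self.api.write(name, node=chosen)    # write the binding back


class Kubelet:
    def __init__(self, api, node):
        self.api, self.node = api, node
        api.watch(self.on_pod)

    def on_pod(self, name, pod):
        if pod["node"] == self.node and pod["status"] == "Pending":
            # A real kubelet would call the container runtime here.
            self.api.write(name, status="Running")   # report the result back


api = ApiServer()
Scheduler(api, nodes=["node-1"])
Kubelet(api, "node-1")
api.write("nginx")    # the "kubectl run" request lands in the apiserver
api.run()
print(api.pods["nginx"])   # {'node': 'node-1', 'status': 'Running'}
```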

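# Main Pod Attributes That Affect Scheduling

- Resource requirements (the basis for resource-based scheduling)
- Scheduling policies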

# How Resource Limits Affect Pod Scheduling

Container resource limits (the maximum the container may use):

- resources.limits.cpu

- resources.limits.memory

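The container's minimum resource requirements (requests), used as the basis for resource allocation when the container is scheduled: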

- resources.requests.cpu

- resources.requests.memory

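Units

CPU: either millicores (`m`) or a decimal number, e.g. 0.5 = 500m, 1 = 1000m.

`m` means millicore, and 1000m equals 1 core: 0.5 = 500m, 1 core = 1000m, 2 cores = 2000m.

Memory: `Mi` (mebibyte, 1Mi = 1024 × 1024 bytes; close to, but not exactly, 1 MB).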
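A minimal sketch of these unit conversions, assuming only the common suffixes (`m` for CPU; `Ki`/`Mi`/`Gi` and `k`/`M`/`G` for memory). `cpu_to_millicores` and `memory_to_bytes` are hypothetical helpers; the real Kubernetes quantity parser accepts more forms (for example exponent notation and larger suffixes).

```python
from decimal import Decimal

# Binary (power-of-two) and decimal (power-of-ten) memory suffixes.
MEMORY_SUFFIXES = {
    "Ki": 1024, "Mi": 1024**2, "Gi": 1024**3,
    "k": 1000, "M": 1000**2, "G": 1000**3,
}


def cpu_to_millicores(value: str) -> int:
    """'250m' -> 250, '0.5' -> 500, '2' -> 2000."""
    if value.endswith("m"):
        return int(value[:-1])
    return int(Decimal(value) * 1000)


def memory_to_bytes(value: str) -> int:
    """'128Mi' -> 134217728, '1G' -> 1000000000, '512' -> 512."""
    # Check two-letter suffixes before one-letter ones.
    for suffix in sorted(MEMORY_SUFFIXES, key=len, reverse=True):
        if value.endswith(suffix):
            return int(Decimal(value[: -len(suffix)]) * MEMORY_SUFFIXES[suffix])
    return int(value)   # plain number of bytes


print(cpu_to_millicores("0.5"), memory_to_bytes("128Mi"))   # 500 134217728
```

The `Mi` result (134,217,728 bytes) versus `M` (1,000,000 bytes per unit) is why `Mi` is only approximately a megabyte.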

Check a node's resource usage and what has already been allocated:

```shell
kubectl describe node <node-name>
```

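A simple YAML that sets CPU and memory requests and limits:

```yaml
apiVersion: v1
kind: Pod
metadata:
  labels:
    run: nginx
  name: nginx
spec:
  containers:
  - image: nginx
    name: nginx
    resources:
      requests:
        memory: "128Mi"
        cpu: "250m"
      limits:
        memory: "150Mi"
        cpu: "300m"
```

- When the pod is scheduled, it needs 128Mi of memory and 0.25 CPU cores allocated
- While the pod runs, it can use at most 150Mi of memory and 0.3 CPU cores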

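A few points to note:

- requests must not be greater than limits; otherwise the pod is rejected with an error when it is created
- requests must fit within a node's allocatable resources minus what other pods on that node already request; if no node can satisfy them, the pod stays Pending and cannot be scheduled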
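The two rules above can be sketched as follows, assuming CPU in millicores and memory in Mi. `validate_requests` and `node_fits` are hypothetical helpers, not Kubernetes APIs, and the node figures are made up; the real scheduler also checks other resources such as the pod count and extended resources.

```python
def validate_requests(requests: dict, limits: dict) -> None:
    """Raise if any request is larger than its limit (roughly the API-side check)."""
    for resource, limit in limits.items():
        request = requests.get(resource, 0)
        if request > limit:
            raise ValueError(f"{resource}: request {request} > limit {limit}")


def node_fits(allocatable: dict, already_requested: dict, pod_requests: dict) -> bool:
    """True if the node's unrequested capacity covers every request of the pod.

    The comparison uses the *sum of requests* of pods already on the node,
    not their live usage.
    """
    return all(
        allocatable.get(resource, 0) - already_requested.get(resource, 0) >= amount
        for resource, amount in pod_requests.items()
    )


pod_requests = {"cpu": 250, "memory": 128}
pod_limits = {"cpu": 300, "memory": 150}
validate_requests(pod_requests, pod_limits)   # passes: requests <= limits

node = {"cpu": 2000, "memory": 3800}          # hypothetical allocatable resources
print(node_fits(node, {"cpu": 1000, "memory": 2000}, pod_requests))   # True
print(node_fits(node, {"cpu": 1900, "memory": 2000}, pod_requests))   # False: only 100m CPU left
```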

# nodeSelector & nodeAffinity

# nodeSelector

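Schedules a Pod onto Nodes whose labels match; if no node has matching labels, scheduling fails and the Pod stays Pending.

Purpose

- Exact match on node labels
- Pin Pods to specific nodes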

Label a node:

```shell
kubectl label nodes [node] key=value
```

For example:

```shell
kubectl label nodes liuxiaolu-node env=dev
```

This adds a label with key `env` and value `dev` to the node liuxiaolu-node.

# Example YAML: Create a Pod and Schedule It onto Nodes with a Given Label

```yaml
apiVersion: v1
kind: Pod
metadata:
  labels:
    run: nginx
  name: nginx-node-selector
spec:
  nodeSelector:
    env: dev
  containers:
  - image: nginx
    name: nginx
```

YAML explanation:

- Creates a pod named nginx-node-selector
- Schedules the pod onto a node that carries the label env=dev
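The nodeSelector rule described above boils down to an exact key/value check. A minimal sketch, assuming hypothetical label sets for the two nodes in this cluster; `matches_node_selector` is an illustrative helper, not part of any Kubernetes client.

```python
def matches_node_selector(node_labels: dict, node_selector: dict) -> bool:
    # Every key/value pair in nodeSelector must be present on the node, exactly.
    return all(node_labels.get(key) == value for key, value in node_selector.items())


# Assumed labels; only liuxiaolu-node carries env=dev.
nodes = {
    "liuxiaolu-master": {"kubernetes.io/hostname": "liuxiaolu-master"},
    "liuxiaolu-node": {"kubernetes.io/hostname": "liuxiaolu-node", "env": "dev"},
}
candidates = [name for name, labels in nodes.items()
              if matches_node_selector(labels, {"env": "dev"})]
print(candidates)   # ['liuxiaolu-node']; an empty list would leave the pod Pending
```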

Run it:

```shell
$ kubectl label node liuxiaolu-node env=dev
$ kubectl get node -l env=dev
NAME STATUS ROLES AGE VERSION
liuxiaolu-node Ready <none> 47h v1.21.0
$ kubectl apply -f nginx-node-selector.yaml
pod/nginx-node-selector created
$ kubectl get pod -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
nginx-node-selector 0/1 ContainerCreating 0 24s <none> liuxiaolu-node <none> <none>
```

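Command explanation:

- Label the node liuxiaolu-node with env=dev
- Filter nodes by label to confirm that liuxiaolu-node now carries the label
- Apply nginx-node-selector.yaml to create the pod
- Check that the new pod was scheduled onto liuxiaolu-node as intended
- The result matches expectations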

# nodeAffinity

Node affinity is similar to nodeSelector: it constrains which nodes a Pod can be scheduled onto based on the labels on those nodes.

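Compared with nodeSelector:

- Matching supports richer logic: instead of exact string equality only, labels are matched using operators
- Rules come in hard and soft variants, rather than hard requirements only:
  - Hard (required): must be satisfied
  - Soft (preferred): the scheduler tries to satisfy it, but this is not guaranteed

Operators: In, NotIn, Exists, DoesNotExist, Gt, Lt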
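How the operators listed above evaluate a single `matchExpressions` entry can be sketched like this. `match_expression` is a hypothetical helper, and the `gpu-count` label is an assumed example; the semantics follow the Kubernetes documentation: `NotIn` and `DoesNotExist` also match nodes that lack the key, and `Gt`/`Lt` take a single integer value.

```python
def match_expression(labels: dict, key: str, operator: str, values=()) -> bool:
    """Evaluate one nodeAffinity matchExpression against a node's labels."""
    present = key in labels
    if operator == "In":
        return present and labels[key] in values
    if operator == "NotIn":
        return not present or labels[key] not in values
    if operator == "Exists":
        return present
    if operator == "DoesNotExist":
        return not present
    if operator in ("Gt", "Lt"):
        # Gt/Lt compare the label value, read as an integer, with a single value.
        if not present:
            return False
        try:
            node_value = int(labels[key])
        except ValueError:
            return False
        bound = int(values[0])
        return node_value > bound if operator == "Gt" else node_value < bound
    raise ValueError(f"unknown operator: {operator}")


labels = {"env": "dev", "gpu-count": "2"}   # assumed node labels
print(match_expression(labels, "env", "In", ["dev", "test", "prod"]))   # True
print(match_expression(labels, "zone", "NotIn", ["a"]))                 # True (key absent)
print(match_expression(labels, "gpu-count", "Gt", ["1"]))               # True
```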

# Hard Rule (required)

```yaml
apiVersion: v1
kind: Pod
metadata:
  labels:
    run: nginx
  name: nginx-require-affinity
spec:
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          - key: env
            operator: In
            values:
            - dev
            - test
            - prod
  containers:
  - image: nginx
    name: nginx
```

YAML explanation:

- Creates a pod named nginx-require-affinity
- The pod will be scheduled onto a node labeled env=dev, env=test, or env=prod

Run it (liuxiaolu-node was already labeled env=dev above, so that step is skipped here):

```shell
$ kubectl apply -f nginx-require-affinity.yaml
pod/nginx-require-affinity created
$ kubectl get pod -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
nginx-node-selector 1/1 Running 0 15m 10.244.17.171 liuxiaolu-node <none> <none>
nginx-require-affinity 1/1 Running 0 22s 10.244.17.172 liuxiaolu-node <none> <none>
```

Command explanation:

- Apply nginx-require-affinity.yaml to create the pod
- Check that the new pod was scheduled onto liuxiaolu-node as intended
- The result matches expectations

Now modify this YAML:

```yaml
apiVersion: v1
kind: Pod
metadata:
  labels:
    run: nginx
  name: nginx-require-affinity-pending
spec:
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          - key: env
            operator: In
            values:
            - test
            - prod
  containers:
  - image: nginx
    name: nginx
```

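YAML explanation:

- Remove `dev` from the allowed values, so no node satisfies the requirement any more
- Verify that, as expected, the pod cannot be scheduled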

Run it:

```shell
$ kubectl apply -f nginx-require-affinity-pending.yaml
pod/nginx-require-affinity-pending created
$ kubectl get pod -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
nginx-node-selector 1/1 Running 0 21m 10.244.17.171 liuxiaolu-node <none> <none>
nginx-require-affinity 1/1 Running 0 6m37s 10.244.17.172 liuxiaolu-node <none> <none>
nginx-require-affinity-pending 0/1 Pending 0 21s <none> <none> <none> <none>
```

Command explanation:

- As expected, the new pod stays Pending: no node satisfies the hard rule, so it cannot be scheduled
- The result matches expectations
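The two hard-rule experiments can be reproduced with a small filter. This is a sketch under assumptions: only the `In` operator is modelled, the node labels are assumed, and `term_matches`, `required_filter` and `env_in` are hypothetical helpers. It shows the structure of the rule: expressions inside one `nodeSelectorTerm` are ANDed, the terms themselves are ORed, and nodes that fail are removed outright.

```python
def term_matches(labels: dict, term: dict) -> bool:
    # All matchExpressions inside one nodeSelectorTerm must hold (logical AND).
    # Only the In operator is modelled, to keep the sketch short.
    return all(
        expr["operator"] == "In" and labels.get(expr["key"]) in expr["values"]
        for expr in term["matchExpressions"]
    )


def required_filter(nodes: dict, node_selector_terms: list) -> list:
    # A node passes if ANY nodeSelectorTerm matches (logical OR).
    # Nodes that fail are dropped; an empty result means the pod stays Pending.
    return [
        name for name, labels in nodes.items()
        if any(term_matches(labels, term) for term in node_selector_terms)
    ]


def env_in(*values):
    # nodeSelectorTerms equivalent to the two YAML files in this section.
    return [{"matchExpressions": [{"key": "env", "operator": "In", "values": list(values)}]}]


nodes = {"liuxiaolu-master": {}, "liuxiaolu-node": {"env": "dev"}}   # assumed labels
print(required_filter(nodes, env_in("dev", "test", "prod")))   # ['liuxiaolu-node']
print(required_filter(nodes, env_in("test", "prod")))          # [] -> Pending
```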

# Soft Rule (preferred)

```yaml
apiVersion: v1
kind: Pod
metadata:
  labels:
    run: nginx
  name: nginx-preferred-affinity
spec:
  affinity:
    nodeAffinity:
      preferredDuringSchedulingIgnoredDuringExecution:
      - weight: 1
        preference:
          matchExpressions:
          - key: env
            operator: In
            values:
            - dev
  containers:
  - image: nginx
    name: nginx
```

YAML explanation:

- Creates a pod named nginx-preferred-affinity
- The pod will preferably be scheduled onto a node labeled env=dev

Run it:

```shell
$ kubectl apply -f nginx-preferred-affinity.yaml
pod/nginx-preferred-affinity created
$ kubectl get pod -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
nginx-node-selector 1/1 Running 0 27m 10.244.17.171 liuxiaolu-node <none> <none>
nginx-preferred-affinity 1/1 Running 0 22s 10.244.17.173 liuxiaolu-node <none> <none>
nginx-require-affinity 1/1 Running 0 12m 10.244.17.172 liuxiaolu-node <none> <none>
nginx-require-affinity-pending 0/1 Pending 0 6m13s <none> <none> <none> <none>
```

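Command explanation:

- nginx-preferred-affinity was scheduled onto liuxiaolu-node, as before
- The result matches expectations
- nginx-require-affinity-pending is still Pending, which confirms that it really cannot be scheduled, rather than just not having had enough time to start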

As with the hard rule, modify this YAML again:

```yaml
apiVersion: v1
kind: Pod
metadata:
  labels:
    run: nginx
  name: nginx-preferred-affinity-running
spec:
  affinity:
    nodeAffinity:
      preferredDuringSchedulingIgnoredDuringExecution:
      - weight: 1
        preference:
          matchExpressions:
          - key: env
            operator: In
            values:
            - prod
  containers:
  - image: nginx
    name: nginx
```

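YAML explanation:

- Change the preferred label from env=dev to env=prod
- No node in the cluster carries the label env=prod
- Verify whether the pod can still be scheduled when no node matches the preference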

Run it:

```shell
$ kubectl apply -f nginx-preferred-affinity-running.yaml
pod/nginx-preferred-affinity-running created
$ kubectl get pod -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
nginx-node-selector 1/1 Running 0 33m 10.244.17.171 liuxiaolu-node <none> <none>
nginx-preferred-affinity 1/1 Running 0 5m43s 10.244.17.173 liuxiaolu-node <none> <none>
nginx-preferred-affinity-running 1/1 Running 0 27s 10.244.17.174 liuxiaolu-node <none> <none>
nginx-require-affinity 1/1 Running 0 17m 10.244.17.172 liuxiaolu-node <none> <none>
nginx-require-affinity-pending 0/1 Pending 0 11m <none> <none> <none> <none>
```


Command explanation:

- Even though no node carries the preferred label, nginx-preferred-affinity-running was scheduled successfully and is Running
- The result matches expectations
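Why the soft rule never blocks scheduling can be sketched with a toy scoring step. Assumptions: only the `In` operator is modelled, the node labels are assumed, and `preferred_score`, `pick_node` and `prefer_env` are hypothetical helpers. The real scheduler combines this score with other scoring plugins and breaks ties among equally scored nodes itself; here `max()` simply takes the first.

```python
def preferred_score(labels: dict, preferred_terms: list) -> int:
    # Add the weight of every preference whose matchExpressions all hold.
    score = 0
    for term in preferred_terms:
        exprs = term["preference"]["matchExpressions"]
        if all(labels.get(e["key"]) in e["values"] for e in exprs):
            score += term["weight"]
    return score


def pick_node(nodes: dict, preferred_terms: list) -> str:
    # Preferences only rank the candidates; they never remove a node,
    # so a pod with only soft rules is still placed when nothing matches.
    return max(nodes, key=lambda name: preferred_score(nodes[name], preferred_terms))


def prefer_env(value: str, weight: int = 1) -> list:
    # Equivalent of the preferredDuringSchedulingIgnoredDuringExecution YAML above.
    return [{"weight": weight,
             "preference": {"matchExpressions": [
                 {"key": "env", "operator": "In", "values": [value]}]}}]


nodes = {"liuxiaolu-master": {}, "liuxiaolu-node": {"env": "dev"}}   # assumed labels
print(pick_node(nodes, prefer_env("dev")))    # liuxiaolu-node
print(pick_node(nodes, prefer_env("prod")))   # still some node, never Pending
```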

# Once a Node Is Labeled to Match, a Pending Pod Is Rescheduled Right Away

Relabel liuxiaolu-node with env=prod, which satisfies the scheduling rule of nginx-require-affinity-pending.

Run it:

```shell
$ kubectl label node liuxiaolu-node env=prod --overwrite
node/liuxiaolu-node labeled
$ kubectl get pod -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
nginx-node-selector 1/1 Running 0 40m 10.244.17.171 liuxiaolu-node <none> <none>
nginx-preferred-affinity 1/1 Running 0 12m 10.244.17.173 liuxiaolu-node <none> <none>
nginx-preferred-affinity-running 1/1 Running 0 7m31s 10.244.17.174 liuxiaolu-node <none> <none>
nginx-require-affinity 1/1 Running 0 24m 10.244.17.172 liuxiaolu-node <none> <none>
nginx-require-affinity-pending 0/1 ContainerCreating 0 18m <none> liuxiaolu-node <none> <none>
```

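- nginx-require-affinity-pending has now been scheduled and is starting. The pods that were already running stay put even though liuxiaolu-node no longer carries env=dev, because these rules are only evaluated at scheduling time (IgnoredDuringExecution).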
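A toy model of this retry behaviour, under the assumption (a simplification of the real scheduling queue) that every node update simply triggers another attempt for all pods that previously failed. `SchedulingQueue` is a hypothetical class; required rules are modelled as `{label key: set of allowed values}`.

```python
class SchedulingQueue:
    """Toy model: unschedulable pods wait and are retried when a node changes."""

    def __init__(self, nodes: dict):
        self.nodes = nodes          # node name -> labels
        self.unschedulable = {}     # pod name -> required {key: allowed values}
        self.bound = {}             # pod name -> node name

    def schedule(self, pod: str, required: dict):
        for name, labels in self.nodes.items():
            if all(labels.get(key) in allowed for key, allowed in required.items()):
                self.bound[pod] = name
                self.unschedulable.pop(pod, None)
                return name
        self.unschedulable[pod] = required   # stays Pending
        return None

    def on_node_update(self, node: str, new_labels: dict):
        # A node label change may make a waiting pod schedulable, so retry them all.
        self.nodes[node] = new_labels
        for pod, required in list(self.unschedulable.items()):
            self.schedule(pod, required)


q = SchedulingQueue({"liuxiaolu-node": {"env": "dev"}})
print(q.schedule("nginx-require-affinity-pending", {"env": {"test", "prod"}}))   # None
q.on_node_update("liuxiaolu-node", {"env": "prod"})   # kubectl label ... --overwrite
print(q.bound)   # {'nginx-require-affinity-pending': 'liuxiaolu-node'}
```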

# Taints & Tolerations

# Taints

Keeps Pods from being scheduled onto specific Nodes

Use cases

- Dedicated nodes, e.g. nodes equipped with special hardware
- Taint-based eviction

Set a taint:

```shell
kubectl taint node [node] key=value:[effect]
```

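where `[effect]` is one of:

- NoSchedule: Pods are never scheduled onto the node.
- PreferNoSchedule: the scheduler tries to avoid the node.
- NoExecute: Pods are not scheduled onto the node, and Pods already running on it are evicted.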
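For a pod with no tolerations, the three effects above play different roles: two act as filters, one only as a score penalty, and only NoExecute touches running pods. A minimal sketch with hypothetical helpers (`can_schedule`, `penalty`, `evicts_running_pods`, `choose_node`); the taint sets mirror the experiments that follow.

```python
from typing import NamedTuple, Optional


class Taint(NamedTuple):
    key: str
    value: str
    effect: str   # "NoSchedule", "PreferNoSchedule" or "NoExecute"


def can_schedule(taints: list) -> bool:
    # Filter: NoSchedule and NoExecute both rule the node out for a new pod.
    return not any(t.effect in ("NoSchedule", "NoExecute") for t in taints)


def penalty(taints: list) -> int:
    # Score: PreferNoSchedule only makes the node less attractive.
    return sum(1 for t in taints if t.effect == "PreferNoSchedule")


def evicts_running_pods(taints: list) -> bool:
    # Only NoExecute affects pods already running on the node.
    return any(t.effect == "NoExecute" for t in taints)


def choose_node(nodes: dict) -> Optional[str]:
    candidates = [name for name, taints in nodes.items() if can_schedule(taints)]
    if not candidates:
        return None   # no feasible node: the pod stays Pending
    return min(candidates, key=lambda name: penalty(nodes[name]))


master = [Taint("forbid", "okay", "NoSchedule")]
print(choose_node({"liuxiaolu-master": master,
                   "liuxiaolu-node": [Taint("forbid", "okay", "PreferNoSchedule")]}))   # liuxiaolu-node
print(choose_node({"liuxiaolu-master": master,
                   "liuxiaolu-node": [Taint("forbid", "okay", "NoExecute")]}))          # None
```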

Query the taints on nodes:

```shell
kubectl describe node | grep Taints
```

Remove a taint:

```shell
kubectl taint node [node] key:[effect]-
```

```yaml
apiVersion: v1
kind: Pod
metadata:
  labels:
    run: nginx
  name: nginx-taints
spec:
  containers:
  - image: nginx
    name: nginx
```

YAML explanation:

- The same YAML is used in each test below to check whether the pod can be scheduled

# Never Scheduled (NoSchedule)

Run it:

```shell
$ kubectl taint node liuxiaolu-node forbid=okay:NoSchedule
node/liuxiaolu-node tainted
$ kubectl apply -f nginx-taints.yaml
pod/nginx-taints created
$ kubectl get pod -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
nginx-node-selector 1/1 Running 0 69m 10.244.17.171 liuxiaolu-node <none> <none>
nginx-preferred-affinity 1/1 Running 0 41m 10.244.17.173 liuxiaolu-node <none> <none>
nginx-preferred-affinity-running 1/1 Running 0 36m 10.244.17.174 liuxiaolu-node <none> <none>
nginx-require-affinity 1/1 Running 0 53m 10.244.17.172 liuxiaolu-node <none> <none>
nginx-require-affinity-pending 1/1 Running 0 47m 10.244.17.175 liuxiaolu-node <none> <none>
nginx-taints 0/1 ContainerCreating 0 4m54s <none> liuxiaolu-master <none> <none>
```

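Command explanation:

- Taint liuxiaolu-node with forbid=okay and the effect NoSchedule
- Apply nginx-taints.yaml to create the pod
- Check where the pod was scheduled: it no longer goes to liuxiaolu-node, but to another node
- The result matches expectations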

# Avoid Scheduling if Possible (PreferNoSchedule)

Run it:

```shell
$ kubectl taint node liuxiaolu-master forbid=okay:NoSchedule
node/liuxiaolu-master tainted
$ kubectl taint node liuxiaolu-node forbid=okay:PreferNoSchedule --overwrite
node/liuxiaolu-node modified
$ kubectl delete pod nginx-taints
pod "nginx-taints" deleted
$ kubectl apply -f nginx-taints.yaml
pod/nginx-taints created
$ kubectl get pod -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
nginx-node-selector 1/1 Running 0 75m 10.244.17.171 liuxiaolu-node <none> <none>
nginx-preferred-affinity 1/1 Running 0 47m 10.244.17.173 liuxiaolu-node <none> <none>
nginx-preferred-affinity-running 1/1 Running 0 42m 10.244.17.174 liuxiaolu-node <none> <none>
nginx-require-affinity 1/1 Running 0 59m 10.244.17.172 liuxiaolu-node <none> <none>
nginx-require-affinity-pending 1/1 Running 0 53m 10.244.17.175 liuxiaolu-node <none> <none>
nginx-taints 0/1 ContainerCreating 0 14s <none> liuxiaolu-node <none> <none>
```

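Command explanation:

- Taint the master node liuxiaolu-master with forbid=okay and the effect NoSchedule
- Overwrite the forbid=okay taint on liuxiaolu-node, changing its effect to PreferNoSchedule
- Delete the already deployed pod nginx-taints
- Apply nginx-taints.yaml to deploy nginx-taints again
- Check the scheduling result
- With the master node blocked by NoSchedule and liuxiaolu-node only marked PreferNoSchedule, the pod is still scheduled onto liuxiaolu-node, because no better node is available. The result matches expectations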

# Not Scheduled, and Existing Pods on the Node Are Evicted (NoExecute)

Run it:

```shell
$ kubectl taint node liuxiaolu-node forbid=okay:NoExecute --overwrite
node/liuxiaolu-node modified
$ kubectl get pod -o wide
No resources found in default namespace.
$ kubectl apply -f nginx-taints.yaml
pod/nginx-taints created
$ kubectl get pod -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
nginx-taints 0/1 Pending 0 9s <none> <none> <none> <none>
```

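Command explanation:

- Update the taint on liuxiaolu-node to the effect NoExecute
- Check the result: all pods have been evicted
- Apply nginx-taints.yaml again to create the pod: it cannot be scheduled
- It cannot be scheduled because the master node is tainted NoSchedule and liuxiaolu-node is tainted NoExecute, which both blocks scheduling and evicts every pod already on it
- The result matches expectations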

# Tolerations

Allows Pods to be scheduled onto Nodes that have matching Taints

```yaml
apiVersion: v1
kind: Pod
metadata:
  labels:
    run: nginx
  name: nginx-taints-toleration
spec:
  containers:
  - image: nginx
    name: nginx
  tolerations:
  - key: forbid
    operator: "Equal"
    value: "okay"
    effect: "NoSchedule"
```

YAML explanation:

- Creates a pod named nginx-taints-toleration
- Tolerates the taint forbid=okay with the effect NoSchedule
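How a toleration such as the one above is matched against taints can be sketched as follows. `Taint`, `Toleration`, `tolerates` and `schedulable` are hypothetical helpers; the matching rules follow the Kubernetes documentation: the effects must agree unless the toleration leaves `effect` empty, `Exists` ignores the value, and an empty key with `Exists` tolerates every taint.

```python
from typing import NamedTuple, Optional


class Taint(NamedTuple):
    key: str
    value: str
    effect: str


class Toleration(NamedTuple):
    key: Optional[str] = None      # None together with Exists matches every taint
    operator: str = "Equal"        # "Equal" or "Exists"
    value: Optional[str] = None
    effect: Optional[str] = None   # None matches every effect


def tolerates(tol: Toleration, taint: Taint) -> bool:
    if tol.effect is not None and tol.effect != taint.effect:
        return False
    if tol.key is None:
        return tol.operator == "Exists"
    if tol.key != taint.key:
        return False
    return tol.operator == "Exists" or tol.value == taint.value


def schedulable(tolerations: list, taints: list) -> bool:
    # Every NoSchedule/NoExecute taint must be tolerated by at least one toleration.
    return all(
        any(tolerates(tol, taint) for tol in tolerations)
        for taint in taints
        if taint.effect in ("NoSchedule", "NoExecute")
    )


pod_tolerations = [Toleration("forbid", "Equal", "okay", "NoSchedule")]
print(schedulable(pod_tolerations, [Taint("forbid", "okay", "NoSchedule")]))   # True  (master)
print(schedulable(pod_tolerations, [Taint("forbid", "okay", "NoExecute")]))    # False (node)
```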

Run it:

```shell
$ kubectl apply -f nginx-taints-toleration.yaml
pod/nginx-taints-toleration created
$ kubectl get pod -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
nginx-taints 0/1 Pending 0 4m17s <none> <none> <none> <none>
nginx-taints-toleration 0/1 ContainerCreating 0 16s <none> liuxiaolu-master <none> <none>
```

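Command explanation:

- Apply nginx-taints-toleration.yaml to create the pod
- Although the master node is tainted NoSchedule, the pod was still scheduled onto it, because it tolerates that taint
- The result matches expectations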

# nodeName

Prerequisites

- The master node is tainted with the effect NoSchedule
- liuxiaolu-node is tainted with the effect NoExecute (evicts pods)

YAML:

```yaml
apiVersion: v1
kind: Pod
metadata:
  labels:
    run: nginx
  name: nginx-taints-node-name
spec:
  nodeName: liuxiaolu-master
  containers:
  - image: nginx
    name: nginx
```

Run it:

```shell
$ kubectl apply -f nginx-taints-node-name.yaml
pod/nginx-taints-node-name created
$ kubectl get pod -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
nginx-taints 0/1 Pending 0 9m17s <none> <none> <none> <none>
nginx-taints-node-name 0/1 ContainerCreating 0 2s <none> liuxiaolu-master <none> <none>
nginx-taints-toleration 1/1 Running 0 5m16s 10.244.10.202 liuxiaolu-master <none> <none>
```

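Command explanation:

- Apply nginx-taints-node-name.yaml to create the pod
- Although the master node is tainted NoSchedule, the pod was still placed on the master node
- The result matches expectations
- This is because setting nodeName bypasses the scheduler and places the pod directly on that node, so the NoSchedule taint, which the scheduler enforces, has no effect. This approach is rarely used in practice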
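The difference between the two pods above can be sketched as a branch that decides whether the scheduler is consulted at all. `toy_scheduler` and `place` are hypothetical helpers, and the taint effects per node are assumed to match this section's prerequisites. The kubelet still runs its own admission checks on a pod placed via nodeName; those are not modelled here.

```python
from typing import Optional


def toy_scheduler(pod: dict, nodes: dict) -> Optional[str]:
    # The pod has no tolerations: any NoSchedule/NoExecute taint rules a node out.
    for name, effects in nodes.items():
        if not {"NoSchedule", "NoExecute"} & set(effects):
            return name
    return None   # Pending


def place(pod: dict, nodes: dict) -> Optional[str]:
    # With spec.nodeName set, the scheduler is never consulted, so its
    # filters (including the NoSchedule taint check) never run for this pod.
    if pod.get("nodeName"):
        return pod["nodeName"]
    return toy_scheduler(pod, nodes)


nodes = {"liuxiaolu-master": ["NoSchedule"], "liuxiaolu-node": ["NoExecute"]}
print(place({"name": "nginx-taints"}, nodes))   # None -> Pending
print(place({"name": "nginx-taints-node-name", "nodeName": "liuxiaolu-master"}, nodes))   # liuxiaolu-master
```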

# DaemonSet控制器

Purpose

- Runs one Pod on every Node
- A newly joined Node also runs one Pod automatically

Use cases

- Network plugins
- Monitoring agents
- Log collection agents
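The "one Pod per Node, including new Nodes" behaviour described above is a reconcile loop comparing desired and actual state. A minimal sketch, assuming a hypothetical `reconcile` helper and a hypothetical node named `new-node`; the real DaemonSet controller also honours nodeSelector, affinity and taints when deciding which nodes are eligible.

```python
def reconcile(eligible_nodes: list, pods_per_node: dict) -> tuple:
    """One pass of a toy DaemonSet control loop.

    eligible_nodes: nodes that should run the daemon pod.
    pods_per_node: node name -> number of daemon pods currently on it.
    Returns (nodes needing a new pod, nodes whose pods should be removed).
    """
    create = [n for n in eligible_nodes if pods_per_node.get(n, 0) == 0]
    delete = [n for n, count in pods_per_node.items()
              if count > 0 and n not in eligible_nodes]
    return create, delete


# First pass: no daemon pods exist yet.
print(reconcile(["liuxiaolu-master", "liuxiaolu-node"], {}))
# (['liuxiaolu-master', 'liuxiaolu-node'], [])

# A hypothetical node "new-node" joins after both pods are running.
print(reconcile(["liuxiaolu-master", "liuxiaolu-node", "new-node"],
                {"liuxiaolu-master": 1, "liuxiaolu-node": 1}))
# (['new-node'], [])
```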

Verify this by looking at the calico-node pods.

Run it:

```shell
$ kubectl get pod -o wide -n kube-system | grep calico-node
calico-node-bdkkr 1/1 Running 0 37h 10.69.1.161 liuxiaolu-node <none> <none>
calico-node-hd5mx 1/1 Running 0 2d1h 10.69.1.160 liuxiaolu-master <none> <none>
```

Command explanation:

- There is one calico-node pod on the master node and one on liuxiaolu-node

Prerequisites

- All taints have been removed from every node

YAML:

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  labels:
    app: nginx
  name: nginx
spec:
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - image: nginx
        name: nginx
status: {}
```

Run it:

```shell
$ kubectl apply -f nginx-daemon-set.yaml --validate=false
daemonset.apps/nginx created
$ kubectl get pod -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
nginx-taints 1/1 Running 0 24m 10.244.17.177 liuxiaolu-node <none> <none>
nginx-taints-node-name 1/1 Running 0 15m 10.244.10.203 liuxiaolu-master <none> <none>
nginx-taints-toleration 1/1 Running 0 20m 10.244.10.202 liuxiaolu-master <none> <none>
nginx-wv889 1/1 Running 0 39s 10.244.17.178 liuxiaolu-node <none> <none>
nginx-zmh5x 1/1 Running 0 39s 10.244.10.204 liuxiaolu-master <none> <none>
```

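Command explanation:

- Apply nginx-daemon-set.yaml to create the DaemonSet, skipping validation (`--validate=false`)
- The result shows one nginx pod with a random name suffix on each of the master node and liuxiaolu-node
- The result matches expectations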

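# Troubleshooting Scheduling Failures

Check the scheduling result:

```shell
kubectl get pod <NAME> -o wide
```

Check why scheduling failed (see the Events at the end of the output):

```shell
kubectl describe pod <NAME>
```

Common causes:

- Not enough CPU/memory on the nodes
- A node has taints that the pod does not tolerate
- No node matches the pod's label requirements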
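The three common causes above map onto three checks that can be run per node. This is a hypothetical diagnostic sketch, not the scheduler's own logic or message format: `explain` and `make_node` are illustrative helpers, CPU is in millicores and memory in Mi, and a taint counts as tolerated only if the pod lists the exact same `(key, value, effect)` triple.

```python
def explain(pod: dict, nodes: dict) -> dict:
    """Map each node that cannot host the pod to the first reason found."""
    reasons = {}
    for name, node in nodes.items():
        free_cpu = node["allocatable_cpu"] - node["requested_cpu"]
        free_mem = node["allocatable_mem"] - node["requested_mem"]
        # PreferNoSchedule never blocks; other taints must be tolerated exactly.
        blocking = [t for t in node["taints"]
                    if t[2] != "PreferNoSchedule" and t not in pod["tolerations"]]
        if free_cpu < pod["cpu"] or free_mem < pod["mem"]:
            reasons[name] = "insufficient cpu/memory"
        elif blocking:
            reasons[name] = f"untolerated taint {blocking[0]}"
        elif any(node["labels"].get(k) != v for k, v in pod["node_selector"].items()):
            reasons[name] = "node labels do not match"
    return reasons


def make_node(requested_cpu=0, taints=(), env="dev"):
    # Hypothetical 2-core / 4096Mi node carrying only the fields explain() reads.
    return {"allocatable_cpu": 2000, "allocatable_mem": 4096,
            "requested_cpu": requested_cpu, "requested_mem": 0,
            "taints": list(taints), "labels": {"env": env}}


pod = {"cpu": 250, "mem": 128, "tolerations": [], "node_selector": {"env": "dev"}}
nodes = {
    "node-a": make_node(requested_cpu=1900),
    "node-b": make_node(taints=[("forbid", "okay", "NoSchedule")]),
    "node-c": make_node(env="prod"),
    "node-d": make_node(),
}
for node_name, reason in explain(pod, nodes).items():
    print(node_name, reason)   # node-d is schedulable, so it is not listed
```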