# Kubernetes Monitoring and Logging

# Querying Cluster Status

Check the status of the master (control-plane) components: `kubectl get cs`
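To run this check from a script (for example a cron job), the table can be filtered with awk. The sketch below assumes the column layout shown in the example that follows (NAME first, STATUS second); `unhealthy_components` is a hypothetical helper name, not part of kubectl. It prints every component whose STATUS is not `Healthy` and exits with status 1 if it finds at least one.

```shell
# unhealthy_components: read `kubectl get cs` output on stdin and print the
# NAME of every component whose STATUS column is not "Healthy".
# Assumption: NAME is column 1 and STATUS is column 2, as in kubectl's table.
# Exit status: 1 if any unhealthy component was found, 0 otherwise.
unhealthy_components() {
  awk '
    /^Warning:/ || $1 == "NAME" { next }   # skip deprecation warning and header row
    NF >= 2 && $2 != "Healthy" { print $1; bad = 1 }
    END { exit bad }
  '
}
```

For example: `kubectl get cs 2>/dev/null | unhealthy_components || echo "some control-plane components are unhealthy"`. The `2>/dev/null` drops the deprecation warning, which kubectl writes to stderr.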

Example

- While running this command, I found that the controller-manager and scheduler components of the cluster I had set up earlier were unhealthy:

```
[root@liuxiaolu-master ~]# kubectl get cs
Warning: v1 ComponentStatus is deprecated in v1.19+
NAME                 STATUS      MESSAGE                                                                                       ERROR
controller-manager   Unhealthy   Get "http://127.0.0.1:10252/healthz": dial tcp 127.0.0.1:10252: connect: connection refused
scheduler            Unhealthy   Get "http://127.0.0.1:10251/healthz": dial tcp 127.0.0.1:10251: connect: connection refused
etcd-0               Healthy     {"health":"true"}
```

- Delete the `--port=0` line from the controller-manager and scheduler manifests. That flag disables the insecure HTTP ports (10252 for controller-manager, 10251 for scheduler) that `kubectl get cs` probes, which is why the connections above are refused.

```
[root@liuxiaolu-master ~]# cat /etc/kubernetes/manifests/kube-controller-manager.yaml
apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    component: kube-controller-manager
    tier: control-plane
  name: kube-controller-manager
  namespace: kube-system
spec:
  containers:
  - command:
    ......
    - --node-cidr-mask-size=24
    #### this line ↓↓↓↓here↓↓↓↓
    - --port=0
    - --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt
    ......
[root@liuxiaolu-master ~]# cat /etc/kubernetes/manifests/kube-scheduler.yaml
apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    component: kube-scheduler
    tier: control-plane
  name: kube-scheduler
  namespace: kube-system
spec:
  containers:
  - command:
    ......
    - --leader-elect=true
    #### this line ↓↓↓↓here↓↓↓↓
    - --port=0
    ......
```

- Restart kubelet (kubelet also watches `/etc/kubernetes/manifests` and recreates static Pods when their manifests change, so this step mainly makes sure the change is picked up):

```
systemctl daemon-reload && systemctl restart kubelet
```

- Query the component status again; all components are now healthy:

```
[root@liuxiaolu-master ~]# kubectl get cs
Warning: v1 ComponentStatus is deprecated in v1.19+
NAME                 STATUS    MESSAGE             ERROR
scheduler            Healthy   ok
controller-manager   Healthy   ok
etcd-0               Healthy   {"health":"true"}
```

Check the status of the nodes: `kubectl get node`
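The `--port=0` edit from the example above can also be done without an editor. A minimal sketch, assuming GNU sed (plain `-i` for in-place editing, as on the CentOS-style hosts above) and that the flag sits on its own line exactly as in the kubeadm manifests shown; the helper name `remove_port_zero` and the backup directory argument are hypothetical choices of this sketch.

```shell
# remove_port_zero BACKUP_DIR FILE...
# Copy each manifest into BACKUP_DIR, then delete the line that consists
# solely of "- --port=0" (surrounding whitespace allowed).
# Assumption: GNU sed, so "-i" edits in place without a suffix.
remove_port_zero() {
  local backup_dir=$1 f
  shift
  mkdir -p -- "$backup_dir" || return 1
  for f in "$@"; do
    # Keep backups outside /etc/kubernetes/manifests: kubelet may try to
    # load other files in that directory as static Pods too.
    cp -- "$f" "$backup_dir/$(basename -- "$f").bak" || return 1
    sed -i -e '/^[[:space:]]*-[[:space:]]*--port=0[[:space:]]*$/d' -- "$f" || return 1
  done
}
```

For example: `remove_port_zero /root/manifests-backup /etc/kubernetes/manifests/kube-controller-manager.yaml /etc/kubernetes/manifests/kube-scheduler.yaml` (the backup path is only an illustration).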

Show the API server address and the API-server proxy URLs of cluster services: `kubectl cluster-info`

Dump detailed cluster information: `kubectl cluster-info dump`

Show detailed information about a resource: `kubectl describe <resource> <name>`

Watch a Pod for changes: `kubectl get pod <pod-name> --watch`

List all resource types supported by the API server: `kubectl api-resources`
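Note that `kubectl api-resources` lists resource *types*, and `kubectl get all` covers only a subset of them (Pods, Services, Deployments and so on). A common idiom for listing the actual objects of every listable, namespaced type is to loop over the output of `kubectl api-resources`. The sketch below assumes kubectl is configured for the target cluster; `get_everything` is a hypothetical helper name.

```shell
# get_everything [NAMESPACE]
# List the objects of every namespaced resource type that supports "list"
# in NAMESPACE (default: "default"). One API call per type, so it is slow
# on large clusters. Empty types print a "No resources found" notice on stderr.
get_everything() {
  local ns=${1:-default} r
  kubectl api-resources --verbs=list --namespaced=true -o name |
    while read -r r; do
      printf '== %s ==\n' "$r"
      kubectl get "$r" -n "$ns"
    done
}
```

For example: `get_everything kube-system 2>/dev/null`.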

# Monitoring Cluster Resource Usage with Metrics Server + cAdvisor

metrics-server

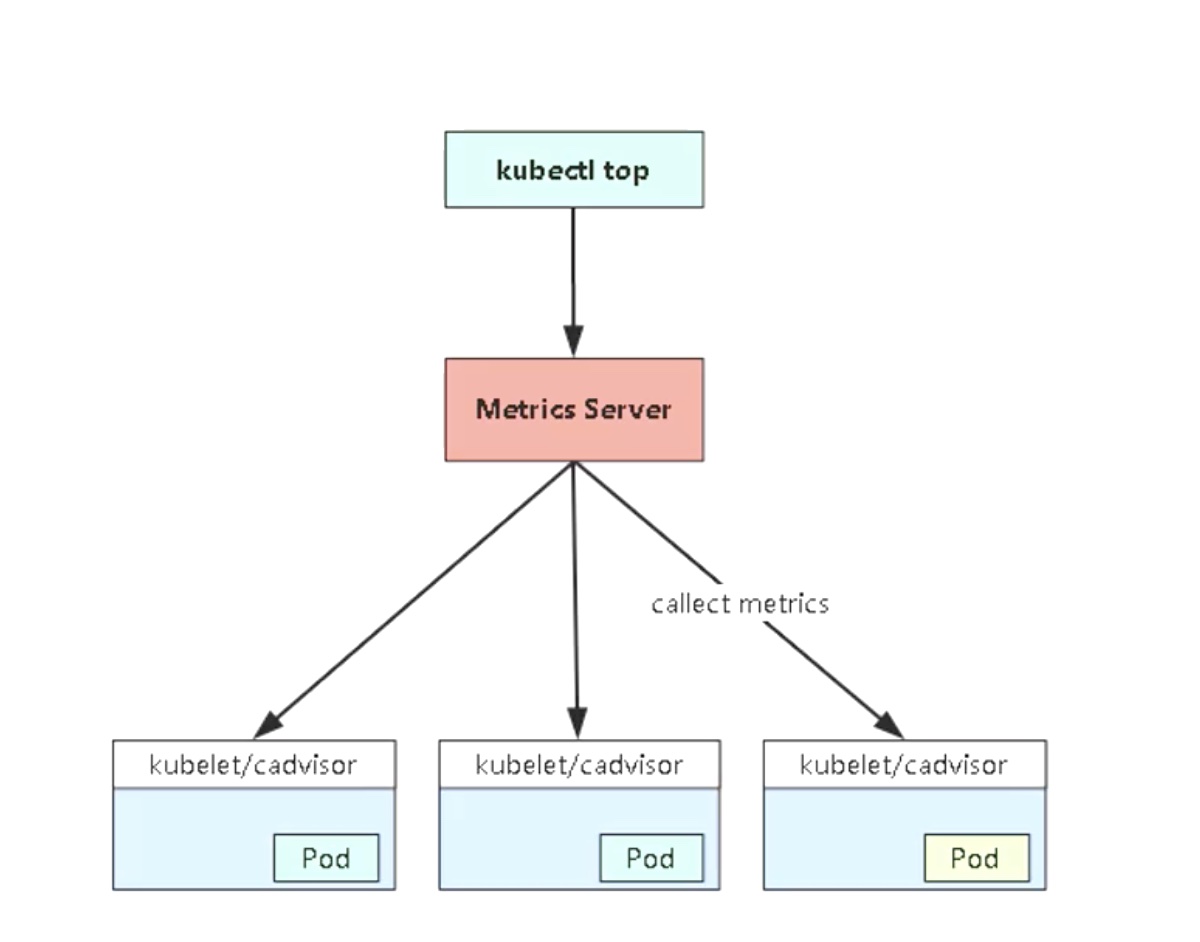

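Metrics Server is a cluster-wide aggregator of resource usage data, deployed in the cluster as an ordinary application. It collects metrics from the Kubelet API on each node and is registered with the master's API server through the Kubernetes aggregation layer.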

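cAdvisor

cAdvisor is built into the kubelet and collects resource usage (CPU, memory, and so on) for the containers on its node.

Data aggregation

Metrics Server scrapes these per-node metrics from each kubelet, aggregates them, and serves them through the `metrics.k8s.io` API, which is what `kubectl top` queries.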

# Deploying Metrics Server

Download the Metrics Server deployment YAML file:

```
wget https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.3.7/components.yaml
```

Edit `components.yaml`:

```
vi components.yaml
```

Two changes are made here:

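- Change the image address. The k8s image registry is not reachable from mainland China, so I pulled the image manually and pushed it to my own Alibaba Cloud image registry: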

```
## replace
k8s.gcr.io/metrics-server/metrics-server:v0.3.7
## with
registry.cn-hangzhou.aliyuncs.com/liuxiaoluxx/metrics-server:v0.3.7
```

- Add two arguments to `args`:

```yaml
- name: metrics-server
  image: registry.cn-hangzhou.aliyuncs.com/liuxiaoluxx/metrics-server:v0.3.7
  imagePullPolicy: IfNotPresent
  args:
    - --cert-dir=/tmp
    - --secure-port=4443
    # arguments added below
    - --kubelet-insecure-tls  # do not verify the HTTPS certificate served by kubelet
    - --kubelet-preferred-address-types=InternalIP  # connect to kubelet via the node IP
```

After the edits, the relevant part of the file looks like this:

```yaml
......
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: metrics-server
  namespace: kube-system
  labels:
    k8s-app: metrics-server
spec:
  selector:
    matchLabels:
      k8s-app: metrics-server
  template:
    metadata:
      name: metrics-server
      labels:
        k8s-app: metrics-server
    spec:
      serviceAccountName: metrics-server
      volumes:
      # mount in tmp so we can safely use from-scratch images and/or read-only containers
      - name: tmp-dir
        emptyDir: {}
      containers:
      - name: metrics-server
        # ↓↓↓↓↓ here ↓↓↓↓↓
        # image: k8s.gcr.io/metrics-server/metrics-server:v0.3.7
        # replaced with
        image: registry.cn-hangzhou.aliyuncs.com/liuxiaoluxx/metrics-server:v0.3.7
        imagePullPolicy: IfNotPresent
        args:
          - --cert-dir=/tmp
          - --secure-port=4443
          ## added arguments start
          - --kubelet-insecure-tls
          - --kubelet-preferred-address-types=InternalIP
          ## added arguments end
        ports:
        - name: main-port
          containerPort: 4443
          protocol: TCP
        securityContext:
          readOnlyRootFilesystem: true
          runAsNonRoot: true
          runAsUser: 1000
        volumeMounts:
        - name: tmp-dir
          mountPath: /tmp
      nodeSelector:
        kubernetes.io/os: linux
......
```

Create metrics-server:

```
[root@liuxiaolu-master ~]# kubectl apply -f components.yaml
clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created
clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created
rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created
Warning: apiregistration.k8s.io/v1beta1 APIService is deprecated in v1.19+, unavailable in v1.22+; use apiregistration.k8s.io/v1 APIService
apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created
serviceaccount/metrics-server created
deployment.apps/metrics-server created
service/metrics-server created
clusterrole.rbac.authorization.k8s.io/system:metrics-server created
clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created
```

Check whether the deployment succeeded; output like the following means it did:

```
[root@liuxiaolu-master ~]# kubectl get pod -n kube-system
NAME                              READY   STATUS    RESTARTS   AGE
metrics-server-76f477d8b4-jlfvl   1/1     Running   0          5m4s
[root@liuxiaolu-master ~]# kubectl get apiservices
NAME                     SERVICE                      AVAILABLE   AGE
v1beta1.metrics.k8s.io   kube-system/metrics-server   True        4m38s
```

View Pod metrics:

```
[root@liuxiaolu-master ~]# kubectl top pod
NAME                                CPU(cores)   MEMORY(bytes)
nginx-deployment-64c9d67564-448pm   0m           2Mi
nginx-deployment-64c9d67564-nfrmc   0m           2Mi
```

View node metrics:

```
[root@liuxiaolu-master ~]# kubectl top node
NAME               CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
liuxiaolu-master   411m         10%    1962Mi          25%
liuxiaolu-node     253m         6%     1332Mi          17%
```
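`kubectl top` output is easy to post-process. As a sketch (the helper name `nodes_over_mem_pct` is hypothetical), the function below reads `kubectl top node` output and prints the nodes whose `MEMORY%` column is at or above a threshold; it assumes the five-column layout shown above.

```shell
# nodes_over_mem_pct THRESHOLD
# Read `kubectl top node` output on stdin; print "NAME MEMORY%" for each
# node whose MEMORY% (assumed to be the 5th column) is >= THRESHOLD.
# Rows without a numeric percentage (e.g. "<unknown>") are skipped.
nodes_over_mem_pct() {
  awk -v limit="$1" '
    $1 == "NAME" { next }                  # skip the header row
    {
      pct = $5
      sub(/%$/, "", pct)                   # "25%" -> "25"
      if (pct ~ /^[0-9]+$/ && pct + 0 >= limit + 0) print $1, $5
    }
  '
}
```

For example: `kubectl top node | nodes_over_mem_pct 80`.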

# Managing Kubernetes Component Logs

# Log Categories

- Logs of Kubernetes system components
- Logs of applications deployed in the Kubernetes cluster
  - Standard output
  - Log files

# Viewing Logs

Components managed by systemd

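```
journalctl -u <service-name>
```

(You can also omit the service name and run plain `journalctl` to query the logs of everything managed by systemd.) For example:

```
journalctl -u kubelet
```

Use the `-f` option to follow the log in real time, for example:

```
journalctl -f -u kubelet
```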
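Kubernetes components such as kubelet log in the klog format, where each message starts with a severity letter (`I`, `W`, `E`, `F`) followed by the date as `mmdd` and the time. Assuming that format, a small filter can pull only the error and fatal lines out of the journal; `klog_errors` is a hypothetical helper name.

```shell
# klog_errors: read log lines on stdin (e.g. from journalctl) and keep only
# klog-style lines of severity E (error) or F (fatal), i.e. lines containing
# a header such as "E0102 10:00:01.200000". Assumes the klog header format.
klog_errors() {
  grep -E '(^|[[:space:]])[EF][0-9]{4} [0-9]{2}:[0-9]{2}:[0-9]{2}\.[0-9]+'
}
```

For example: `journalctl -u kubelet --since "1 hour ago" --no-pager | klog_errors`.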

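Components deployed as Pods

Query a Pod's logs:

```
kubectl logs <pod-name>
```

Follow a Pod's logs in real time:

```
kubectl logs -f <pod-name>
```

Follow a Pod's logs in real time for a specific container (when a Pod has more than one container, you must also specify the container name):

```
kubectl logs -f <pod-name> -c <container-name>
```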
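With several containers in one Pod it can be handy to save every container's log in one go. A minimal sketch, assuming kubectl access to the cluster; `dump_pod_logs` is a hypothetical helper, and the container list is read from the Pod spec with a jsonpath query.

```shell
# dump_pod_logs POD [NAMESPACE]
# Save the logs of every container in POD to ./<pod>_<container>.log.
dump_pod_logs() {
  local pod=$1 ns=${2:-default} containers c
  # Space-separated container names from the Pod spec (init containers excluded).
  containers=$(kubectl get pod "$pod" -n "$ns" -o jsonpath='{.spec.containers[*].name}') || return 1
  for c in $containers; do
    kubectl logs "$pod" -n "$ns" -c "$c" > "${pod}_${c}.log" || return 1
  done
}
```

For example, `dump_pod_logs my-pod` writes one file per container into the current directory.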

Request flow of the `kubectl logs` command:

logs -> apiserver -> kubelet -> container

Path of the standard-output log on the host (Docker with its default `json-file` logging driver):

```
/var/lib/docker/containers/[container-id]/[container-id]-json.log
```

Example

Use `kubectl get pod -o wide` to find the node the Pod is running on:

```
[root@liuxiaolu-master ~]# kubectl get pod -o wide
NAME                                READY   STATUS    RESTARTS   AGE    IP              NODE             NOMINATED NODE   READINESS GATES
blog-deployment-bb75fc57d-crghh     1/1     Running   0          2d     10.244.17.149   liuxiaolu-node   <none>           <none>
blog-deployment-bb75fc57d-fxcvq     1/1     Running   0          2d     10.244.17.150   liuxiaolu-node   <none>           <none>
blog-deployment-bb75fc57d-hkt78     1/1     Running   0          2d     10.244.17.148   liuxiaolu-node   <none>           <none>
nginx-deployment-64c9d67564-448pm   1/1     Running   0          5d1h   10.244.17.142   liuxiaolu-node   <none>           <none>
nginx-deployment-64c9d67564-nfrmc   1/1     Running   0          5d1h   10.244.17.143   liuxiaolu-node   <none>           <none>
```

Take `blog-deployment-bb75fc57d-crghh` as an example. This Pod runs on the `liuxiaolu-node` node, so we log in to that node's server and run `docker ps` to find the container ID:

```
[root@liuxiaolu-node ~]# docker ps | grep blog-deployment-bb75fc57d-crghh
05dcbaf30466   85d9615cae7c                                        "/docker-entrypoint.…"   2 days ago   Up 2 days   k8s_blog_blog-deployment-bb75fc57d-crghh_default_95fcb2cb-09ca-44ad-bd32-320bea53df99_0
69675c8aa452   registry.aliyuncs.com/google_containers/pause:3.2   "/pause"                 2 days ago   Up 2 days   k8s_POD_blog-deployment-bb75fc57d-crghh_default_95fcb2cb-09ca-44ad-bd32-320bea53df99_0
```

The command shows two containers. The one whose name starts with `k8s_POD` runs `/pause`: it is the Pod's infrastructure (pause) container and holds the Pod's network namespace. The other one is the actual application, so the (truncated) container ID we want is `05dcbaf30466`. Next, type `cat /var/lib/docker/containers/05dcbaf30466` and press Tab to complete the full container ID, then append the file name following the pattern above (`<container-id>-json.log`) to read all the logs produced by this container.
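The Tab-completion step can also be scripted. The sketch below (the helper name `json_log_for` and the `DOCKER_ROOT` override are hypothetical) expands a truncated container ID such as `05dcbaf30466` against the directories under `/var/lib/docker/containers` and prints the `<container-id>-json.log` path; it assumes Docker's default data root and the json-file logging driver. On a real node, `docker inspect --format '{{.LogPath}}' <container-id>` reports the same path directly.

```shell
# json_log_for ID_PREFIX
# Resolve a (possibly truncated) container ID to its json-file log path,
# like pressing Tab after /var/lib/docker/containers/<prefix>.
# Assumes Docker's data root (override with DOCKER_ROOT for testing).
# Fails unless exactly one container directory matches the prefix.
json_log_for() {
  local root=${DOCKER_ROOT:-/var/lib/docker} count=0 match= d id
  for d in "$root/containers/$1"*/; do
    if [ -d "$d" ]; then
      match=$d
      count=$((count + 1))
    fi
  done
  if [ "$count" -ne 1 ]; then
    echo "json_log_for: $count containers match '$1'" >&2
    return 1
  fi
  id=$(basename -- "$match")
  printf '%s/containers/%s/%s-json.log\n' "$root" "$id" "$id"
}
```

For example: `tail -n 20 "$(json_log_for 05dcbaf30466)"`.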

There is a catch: when there are many Pods, collecting logs one by one like this becomes very painful. This raises the question of how to collect logs from many containers.

Our approach is to gather the logs of the Pods into a directory on the host.

Take `nginx:1.8` as an example; its log directory is `/var/log/nginx`.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: my-pod
spec:
  containers:
  # container name
  - name: some-nginx
    # image
    image: nginx:1.8
    volumeMounts:
    # use the volume named "logs"
    - name: logs
      # mount the container's /var/log/nginx directory onto that volume
      mountPath: /var/log/nginx
  volumes:
  # define the volume named "logs"
  - name: logs
    # emptyDir keeps the log files on the host's disk (deleted together with the Pod)
    emptyDir: {}
```

Then, on the host (the node the Pod runs on), the log can be found at `/var/lib/kubelet/pods/<pod-id>/volumes/kubernetes.io~empty-dir/logs/access.log`.
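`<pod-id>` in this path is the Pod's UID (`metadata.uid`), not its name. A minimal sketch for building the path, assuming kubectl access and kubelet's default root directory `/var/lib/kubelet`; `pod_emptydir_path` and the `KUBELET_ROOT` override are hypothetical. The directory exists on the node where the Pod runs (the NODE column of `kubectl get pod -o wide`), so inspect it there.

```shell
# pod_emptydir_path POD VOLUME [NAMESPACE]
# Print the host directory that backs the emptyDir volume VOLUME of POD.
# Assumes kubelet's root dir is /var/lib/kubelet (override: KUBELET_ROOT).
pod_emptydir_path() {
  local pod=$1 volume=$2 ns=${3:-default} uid
  uid=$(kubectl get pod "$pod" -n "$ns" -o jsonpath='{.metadata.uid}') || return 1
  [ -n "$uid" ] || return 1
  # emptyDir data lives under pods/<uid>/volumes/kubernetes.io~empty-dir/<volume-name>
  printf '%s/pods/%s/volumes/kubernetes.io~empty-dir/%s\n' \
    "${KUBELET_ROOT:-/var/lib/kubelet}" "$uid" "$volume"
}
```

For the Pod above, `pod_emptydir_path my-pod logs` prints the directory that holds nginx's `access.log`.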